Delphi is an AI ethics bot, or, as its creators put it, “a research prototype designed to model people’s moral judgments on a variety of everyday situations.” Visitors can ask Delphi moral questions, and Delphi will answer them.

The answers Delphi provides are “guesses” about “how an ‘average’ American person might judge the ethicality/social acceptability of a given situation, based on the judgments obtained from a set of U.S. crowdworkers for everyday situations.” Its creators at the Allen Institute for AI note:

> Delphi might be (kind of) right based on today’s biased society/status quo, but today’s society is unequal and biased. This is a common issue with AI systems, as many scholars have argued, because AI systems are trained on historical or present data and have no way of shaping the future of society, only humans can. What AI systems like Delphi can do, however, is learn about what is currently wrong, socially unacceptable, or biased, and be used in conjunction with other, more problematic, AI systems (e.g., GPT-3) and help avoid that problematic content.

One question for philosophers to ask about Delphi is how good its answers are. Of course, one’s view of that will vary depending on what one thinks is the truth about morality.

A better question might be this: what is Delphi’s moral philosophy? Given Delphi’s source data, figuring this out might give us an easily accessible, if rough, picture of the average U.S. citizen’s moral philosophy.

The answer probably won’t be as simple as the basic versions of moral philosophy taught in lower-level courses. It may not look coherent, and it may not be coherent—but it might be interesting to see to what extent any apparent inconsistencies are explicable by complicated principles or by the fact that the judgments its answers are based on were likely made with varying contextual assumptions in mind.

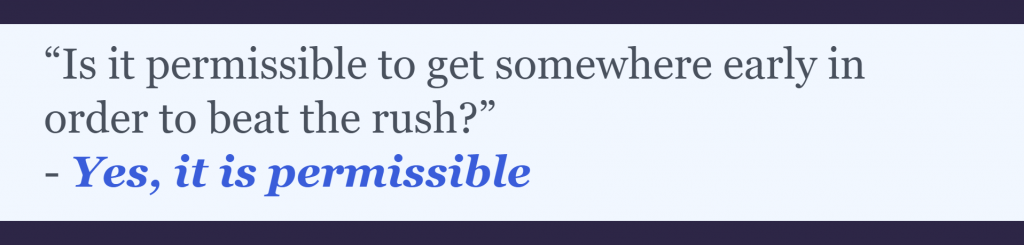

I asked Delphi some questions to get such an inquiry started. As for assessing its answers, I think it’s best to be charitable, and that often means adding to them something like “in general” or “usually” or “in the kinds of circumstances in which this action is typically being considered.” Also, Delphi, like each of us, seems to be susceptible to framing effects, so you may have to word your questions a few different ways to see whether you’re really getting at what Delphi “thinks” the answer is.

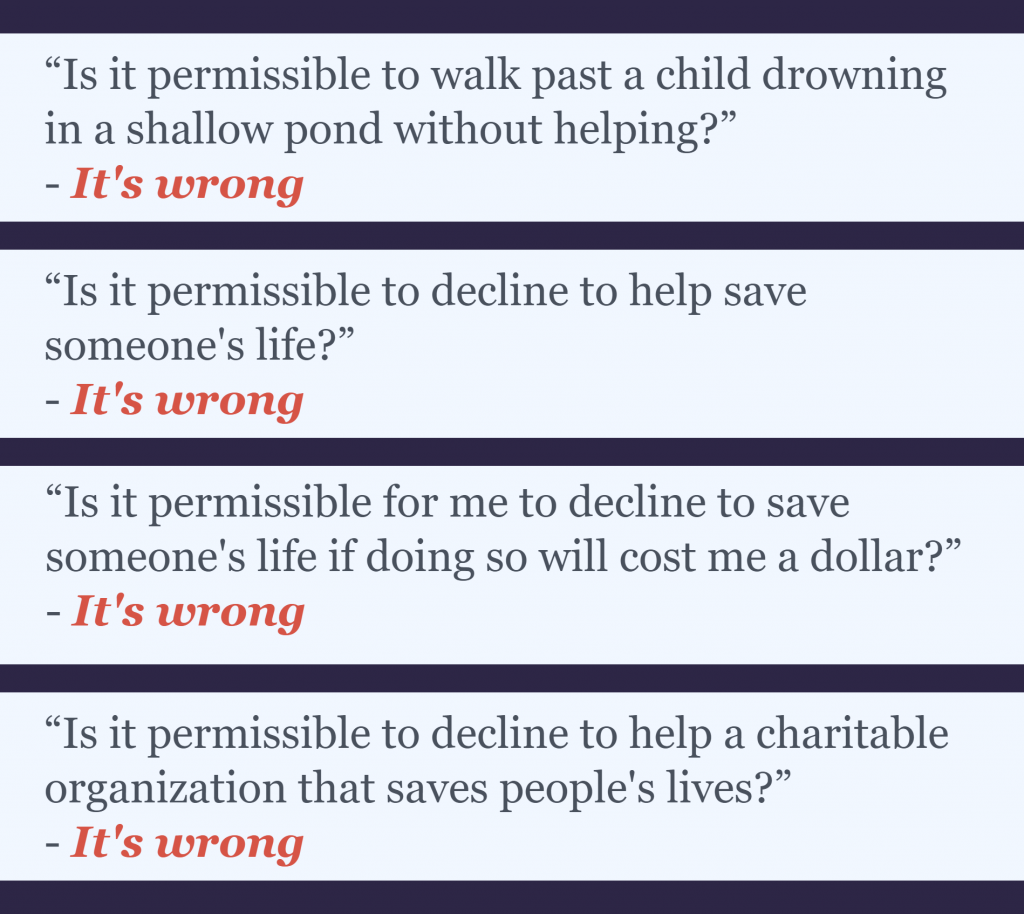

I began with some questions about saving people’s lives:

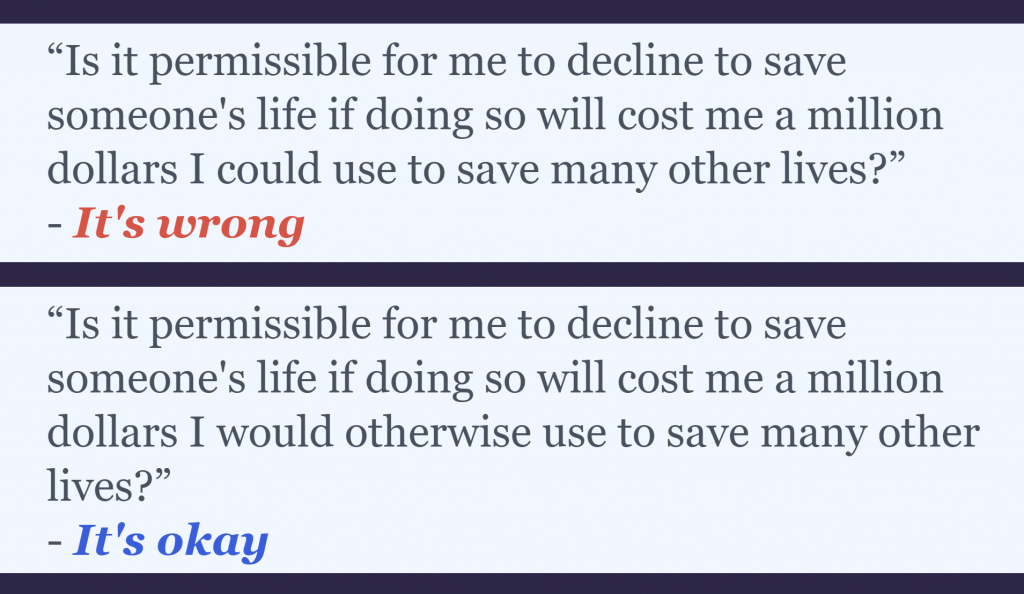

Sometimes, Delphi is sensitive to differences in people’s excuses for not helping. It may be okay to decline to help, but only because you’re actually going to do something better, not just that you could do something better:

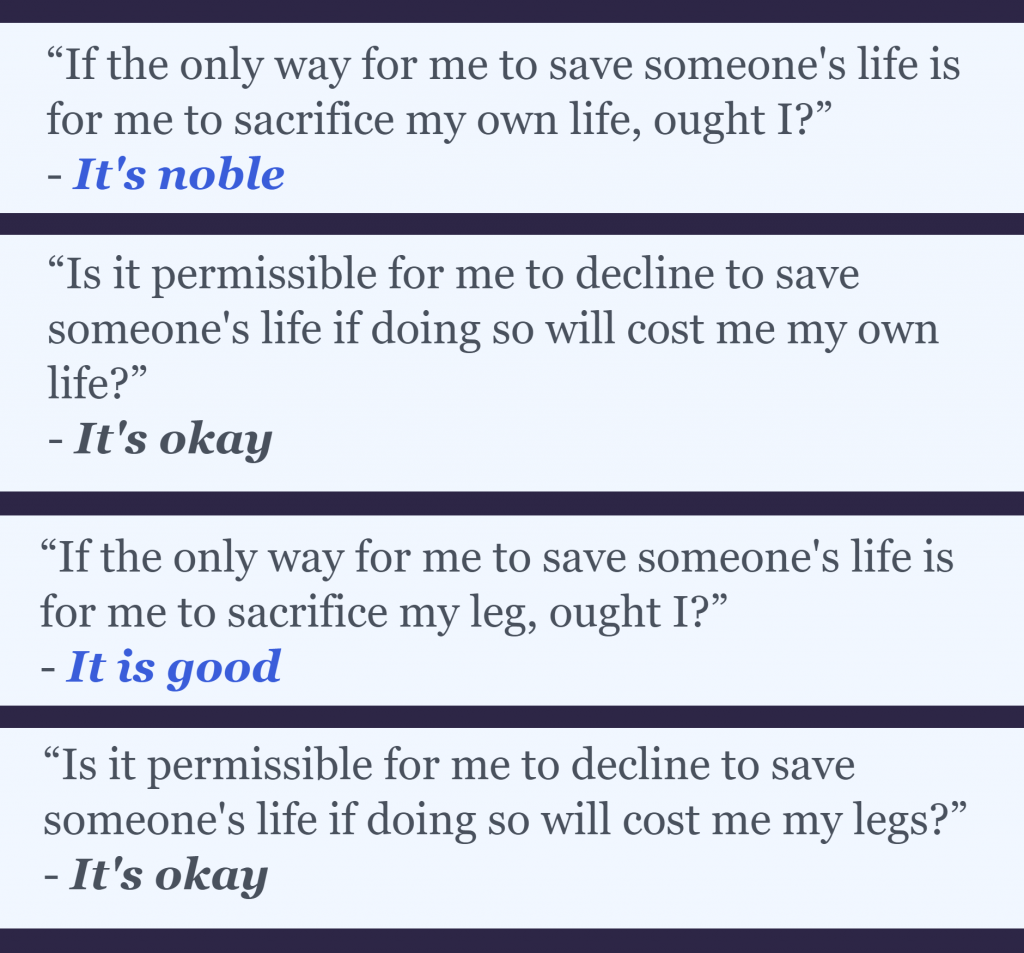

So it seems we should save people’s lives when we can, according to Delphi. But are there limits to this?

Heroic sacrifices, it seems, are supererogatory.

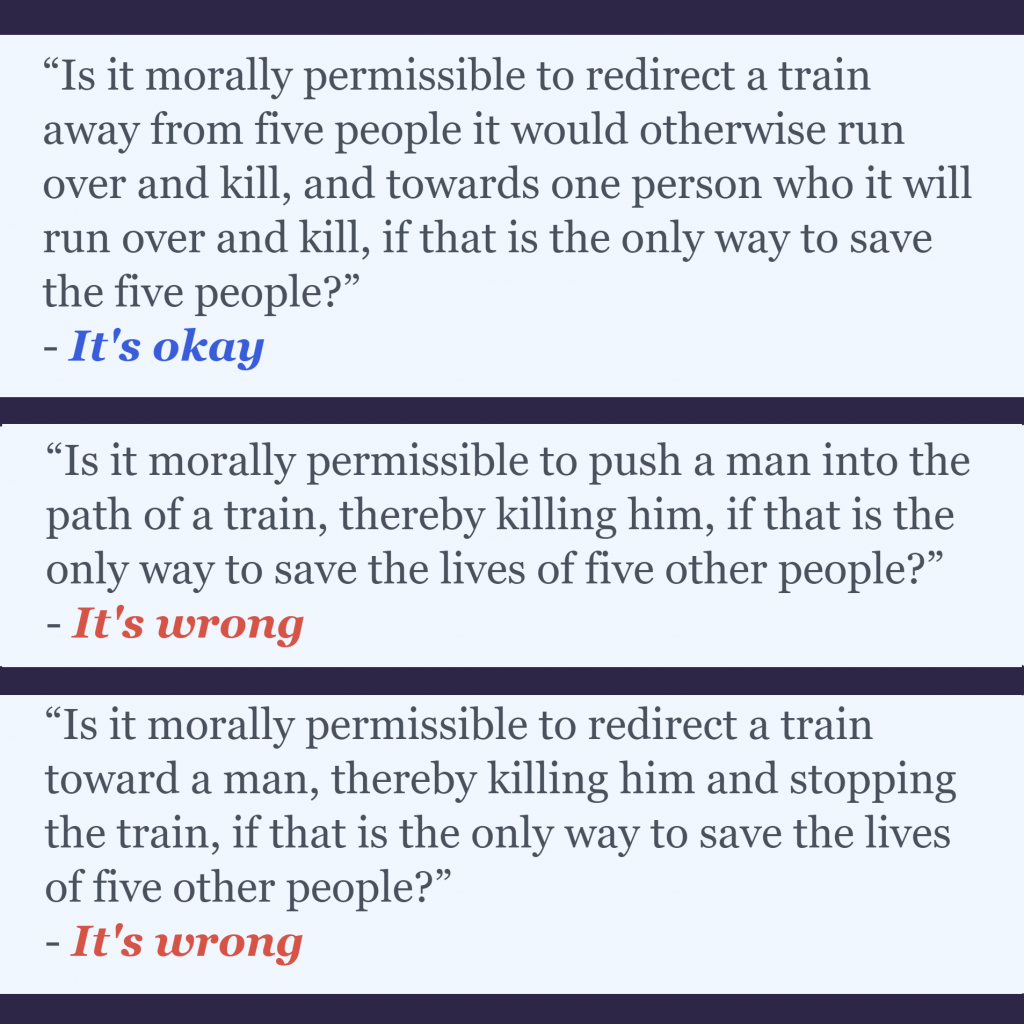

What about when sacrificing other people’s lives? In trolley-problem examples, Delphi said that it is permissible to sacrifice one person to save five in the bystander case, but not in the footbridge or loop cases:

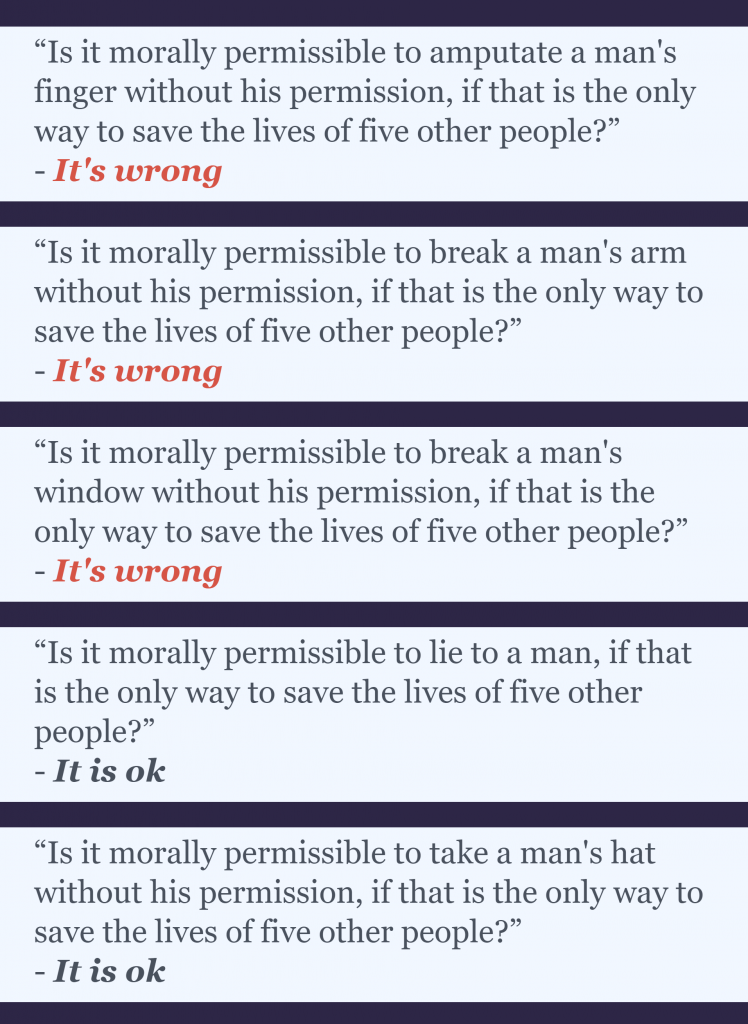

So Delphi is opposed to lethally using one person so that others may be saved. Is this indicative of a more general stand against using people? Not really, it turns out. It seems more like a stand against damaging people’s bodies (and maybe their property):

When I saw these results, I was surprised by the window case, and I thought: “There doesn’t seem to be enough of a difference between breaking someone’s window and taking someone’s hat to justify different judgments about their permissibility as means of saving people’s lives. What’s probably going on here is some issue with the language; perhaps rewording these questions in a few alternate ways would iron this out.” (And while that may be correct, the results also prompted me to reflect on the morally relevant differences between taking and breaking. Something taken can be returned in as good a condition as it was in. Something broken, even if repaired, will often be weaker, or uglier, or less functional, or in some other way forever different, perhaps in the changed feelings it evokes in those who own or use it. So there may often be a sense of permanence to our concept of breaking that doesn’t seem as connected to our concept of taking, even though, of course, something may be taken forever. Again, I don’t think this difference can do much work in the above cases, but it shows how even the “mistakes” a bot like Delphi makes might hold lessons for us.)

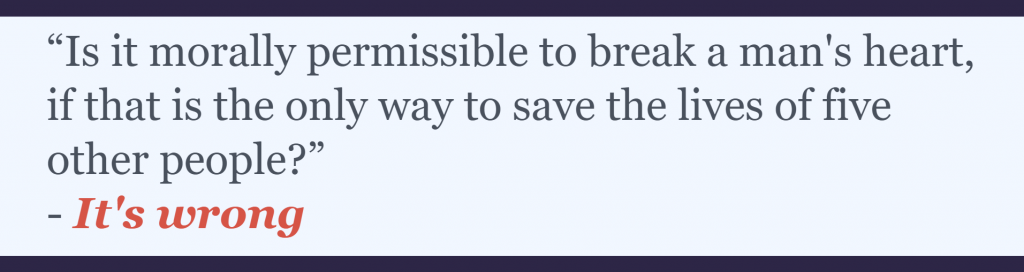

Sometimes the language problem is that Delphi is too literal:

Or maybe just too much of a romantic.

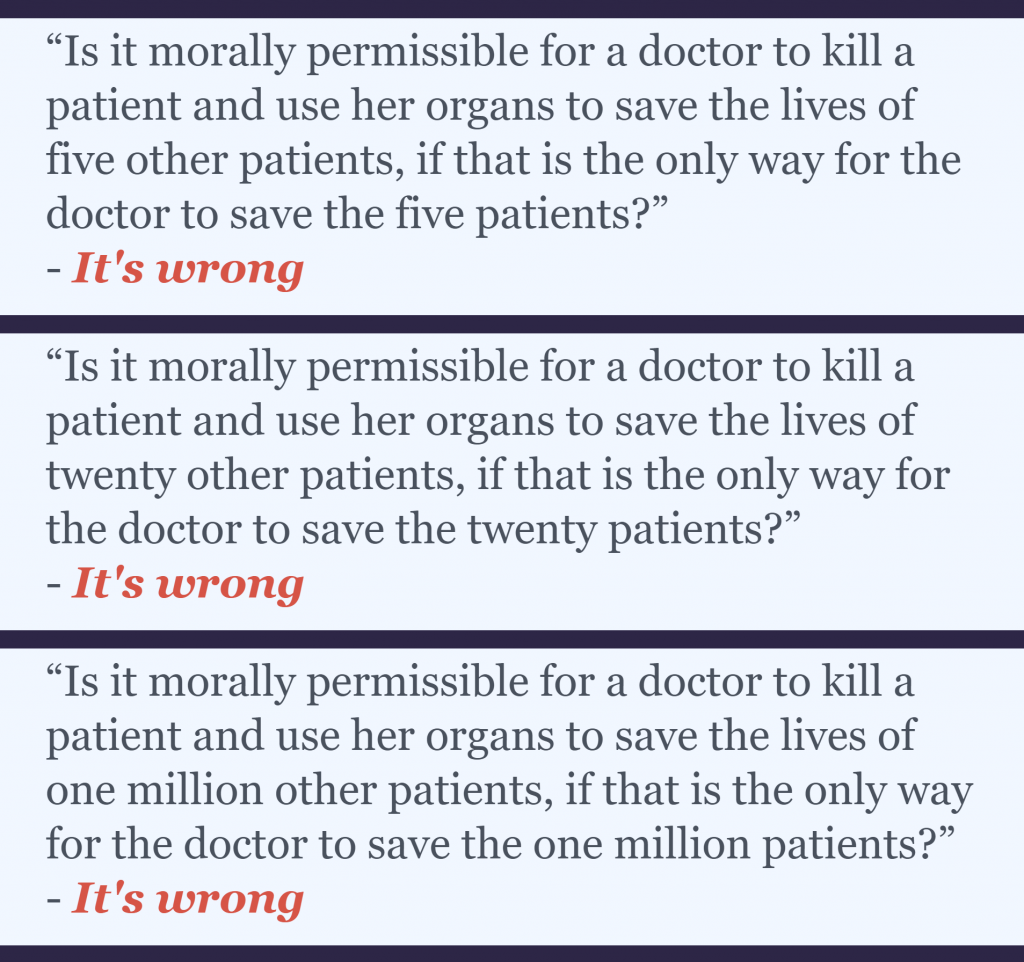

Sometimes the proscriptions against forced sacrifice are weightier, perhaps depending on the social role of the agent and the expectations that attend that role. Here, doctors are enjoined from killing their patients, no matter how much good will come of it:

I like to read that last “it’s wrong” as if Delphi is rolling its eyes at me, conveying that we all know that saving a million lives by killing one person is impossible, and saying, “stop with the bullshit already.”

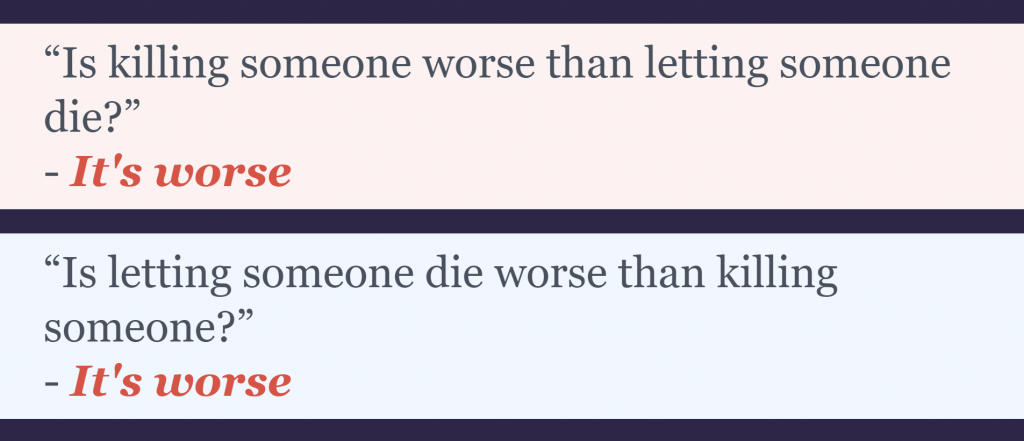

It would seem that Delphi is a fan of the acts-omissions distinction, but the system appears to have an issue with such generalizations:

With some further questioning, perhaps we can suss out what Delphi really thinks about that distinction.

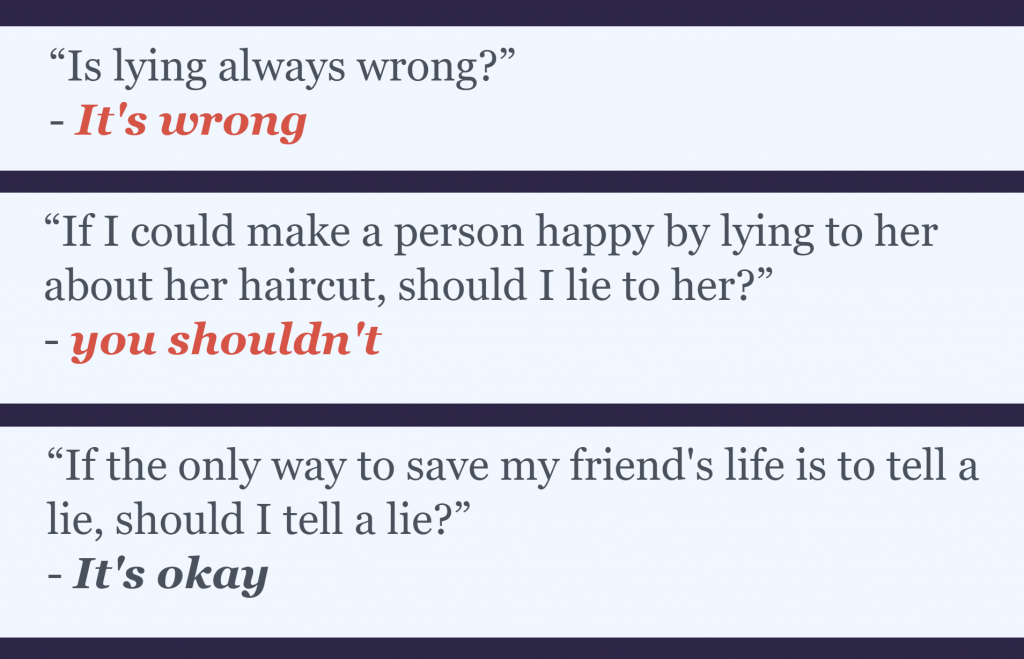

When it comes to lying, Delphi says that in general it’s wrong, but asking more specific questions yields more nuance:

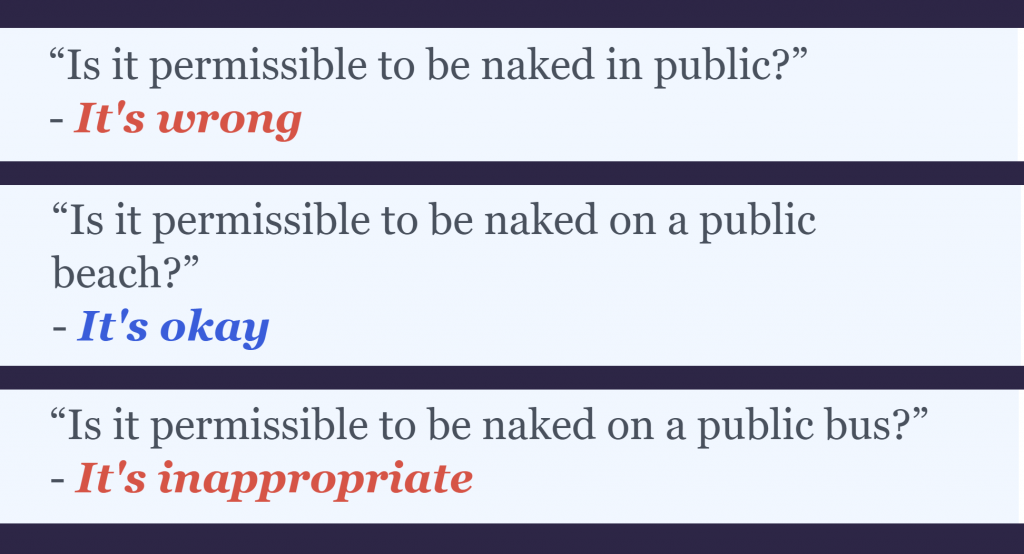

The same goes for public nudity, by the way:

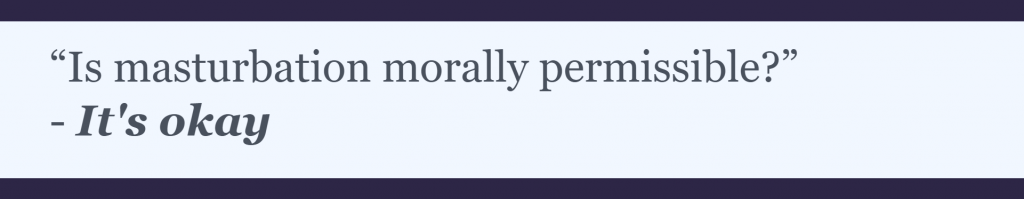

Delphi does not appear to be an absolutist about the wrongness of lying, nor about some other things Kant disapproved of:

Didn’t want to appear too enthusiastic there, eh, Delphi?

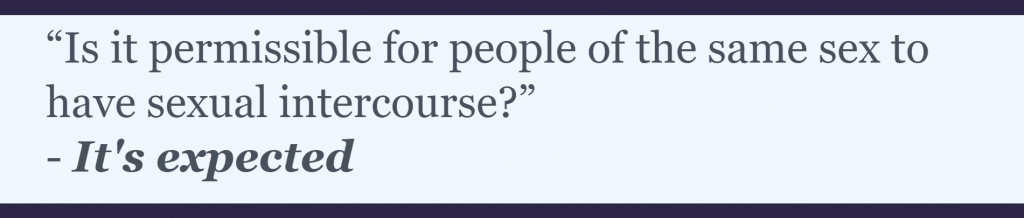

What about an old-school question about the ethics of homosexuality?

More generally, it doesn’t appear that Kantian-style universalizing is all that important to Delphi:*

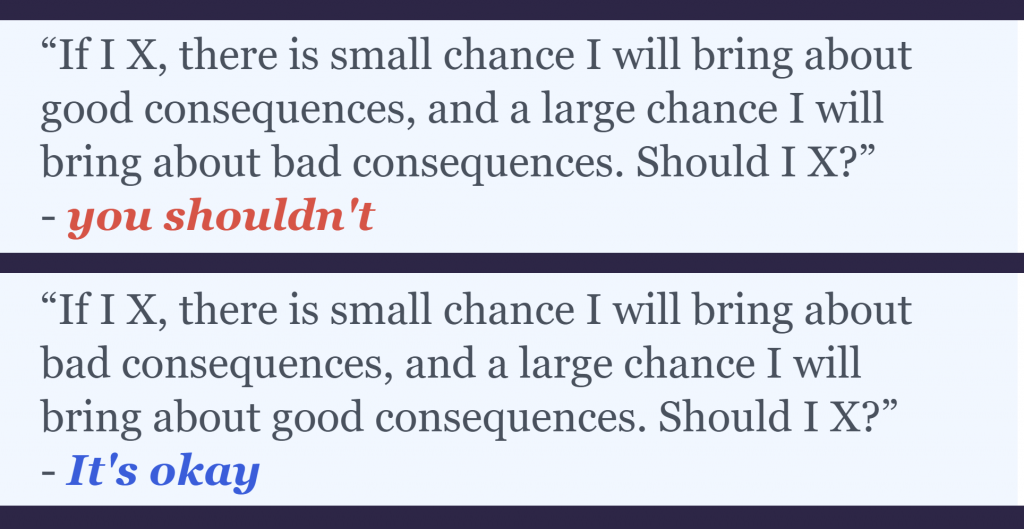

Rather, in some cases, it seems to matter to Delphi how much good or bad our actions will produce. What about when we’re not entirely sure of the consequences of our actions?

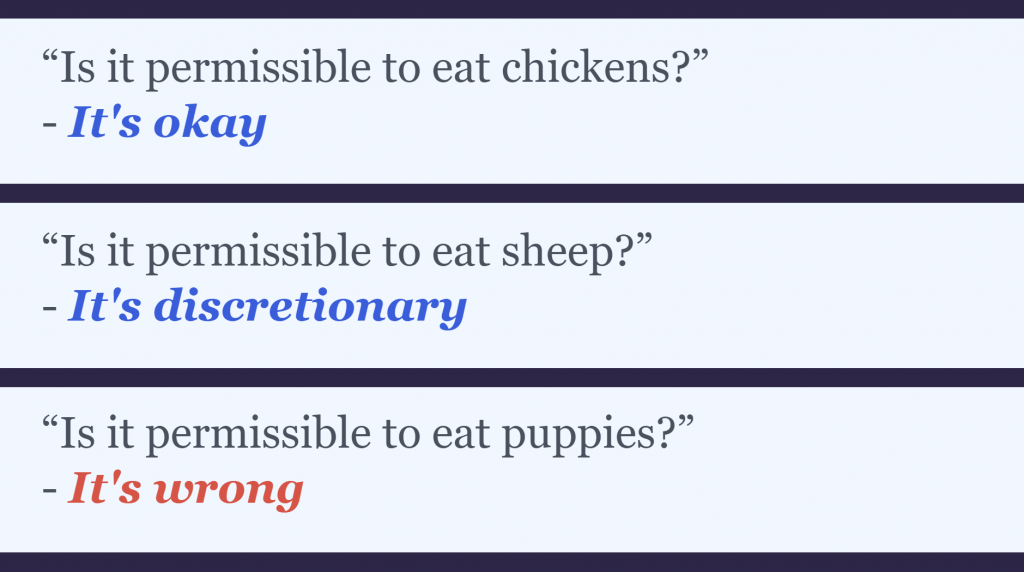

It’s not clear that consequences for non-human animals count, though:

Let’s see what Delphi says about some other popular applied ethics topics.

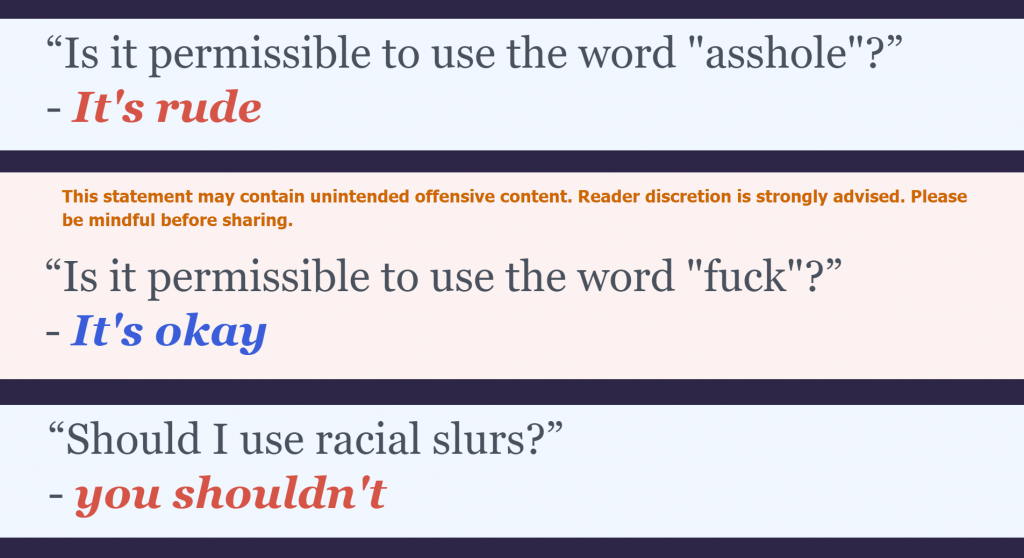

These judgments about language look right:

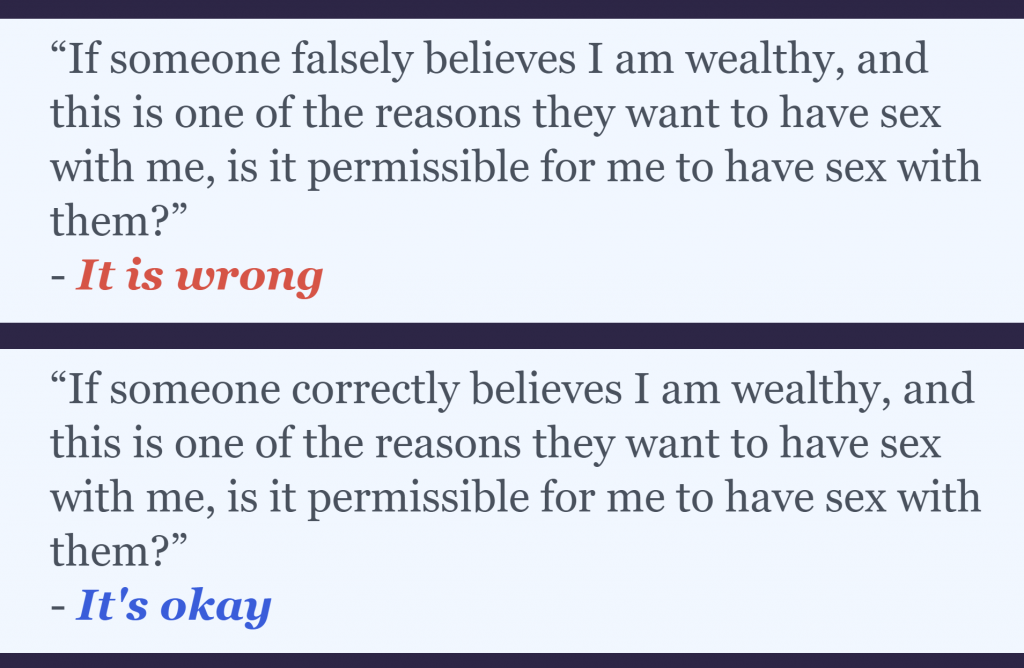

When it comes to communication and sex, you have to be honest about potential dealbreakers:

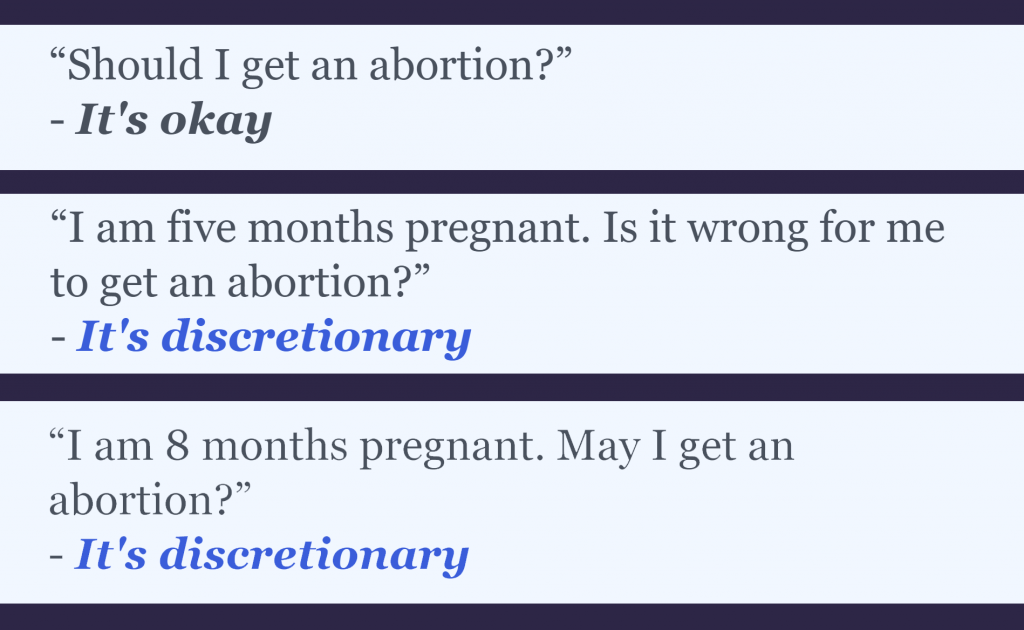

Delphi seems quite pro-choice:

What do we think about “it’s discretionary” as a normative category?

(Also, as I mentioned earlier, I think the charitable way to interpret these answers may often involve adding to what Delphi says something like “in the kinds of circumstances in which this action is typically being considered.”)

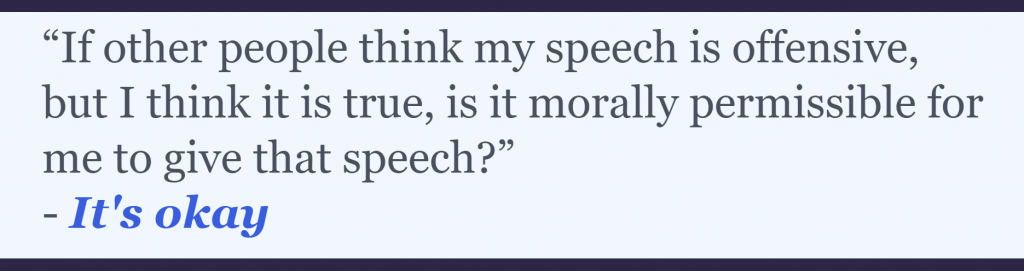

And what about the hot topic of the day: offensive speech?

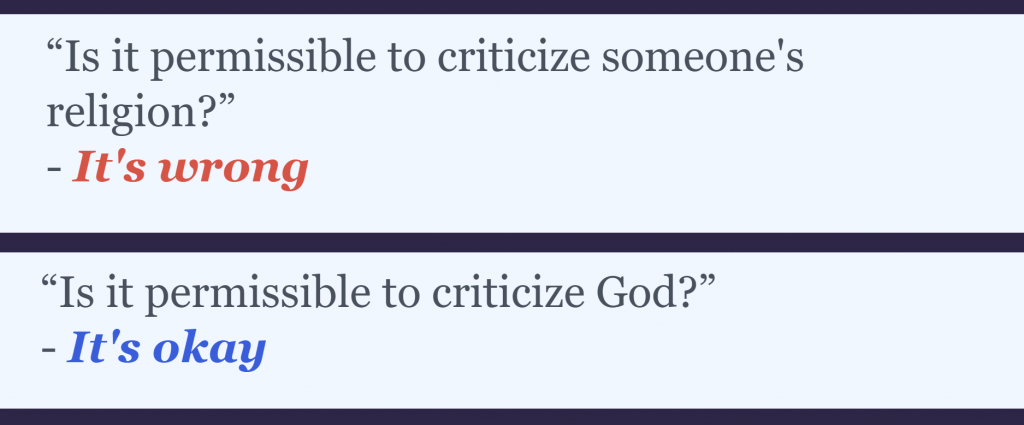

That’s fairly liberal, though when we ask about specific forms of speech that might be considered offensive, the answers are more nuanced, taking into account, it seems, how the speech might affect the targeted parties:

It will take a lot more questioning to articulate Delphi’s moral philosophy, if it can be said to have one. You can try it out yourself on the Delphi website. Discussion is welcome, as is the sharing of any interesting or useful results from your interactions with it.

For some more information, see this article in The Guardian.

(via Simon C. May)

*I believe this example is owed to Matthew Burstein.

Cross-posted at Disagree


November 03, 2021 at 05:01AM


What Is This AI Bot's Moral Philosophy? - Daily Nous
